When you walk into the Akola district police control room, nothing looks dramatically different at first glance: no giant blinking screens, no futuristic dashboard. Yet behind the quiet hum of activity, a silent revolution is underway. It is driven not by gadgets but by data, and by an IPS officer determined to change how policing thinks. Akola is emerging as one of India’s boldest testing grounds for predictive policing. Under SP Archit Chandak, the district is piloting TRINETRA, an AI-driven system that turns scattered crime data into real-time intelligence. By tracking repeat offenders, predicting hotspots and guiding targeted patrols, TRINETRA promises to shift policing from reactive to preventive in the years ahead.

For Akola district SP Archit Chandak, the project grew out of experience in the field. “More than 60 percent of body crime offenders in districts like ours are repeat offenders, yet the data needed to track them is scattered, non-standard and rarely available in real time.” Officers had always known this, but there was no unified way to see the full picture.

This gap, documented over months of ground-level work, meant beat officers, investigators and senior officials were often flying blind. The reality was simple: policing was reactive because the information ecosystem itself was fractured. “Crime records were plentiful: case diaries, CCTNS entries, preventive files, beat notes. They all are there, but all are at different places and are not stitched together.” Decisions depended on individual memory and intuition rather than structured intelligence. The stakes were high. As the team put it, officers did not have “a system that tells them who is actually reoffending, when patterns are accelerating, and where preventive action is needed today.”

TRINETRA was conceived as a response to that urgency, not as a tech experiment but as an operational necessity. The designers built it around a simple purpose: “to solve three fundamental gaps: repeat offenders not being tracked scientifically; data not collated across systems; real time intelligence not reaching officers who need it.”

THE HEART OF THE SYSTEM: A SCORE THAT MAKES THE INVISIBLE VISIBLE

At the centre of TRINETRA lies the Repeat Offender Risk Score (RORS), the engine that transforms raw police data into a single, actionable signal for the field.

The system pulls together FIR histories, preventive action records, modus operandi (MO) patterns, hotspots, behavioural trends and beat intelligence. It then evaluates the “recency, frequency and severity” of past offences to build a live, continuously updating profile of each individual.
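How RORS actually weighs these inputs is not public. As a purely illustrative sketch, assume each past offence contributes a severity weight that decays exponentially with its age, and that frequency enters simply by summing over all offences. The category weights, the 180-day half-life and the names `Offence` and `risk_score` are hypothetical, not TRINETRA's.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical severity weights per offence category (not TRINETRA's real values).
SEVERITY_WEIGHTS = {"body": 3.0, "property": 2.0, "special_law": 1.0}


@dataclass
class Offence:
    offence_date: date
    category: str  # one of the SEVERITY_WEIGHTS keys


def risk_score(offences: list[Offence], today: date, half_life_days: float = 180.0) -> float:
    """Illustrative recency-frequency-severity score.

    Severity: each offence is weighted by its category.
    Recency: that weight halves every `half_life_days`.
    Frequency: contributions of all offences are summed.
    """
    score = 0.0
    for o in offences:
        age_days = (today - o.offence_date).days
        recency = 0.5 ** (age_days / half_life_days)
        score += SEVERITY_WEIGHTS[o.category] * recency
    return score


if __name__ == "__main__":
    today = date(2024, 7, 1)
    history = [
        Offence(today - timedelta(days=180), "body"),  # one half-life old: 3.0 * 0.5
        Offence(today, "property"),                    # brand new: 2.0 * 1.0
    ]
    print(risk_score(history, today))  # 1.5 + 2.0 = 3.5
```

A plain weighted sum like this keeps every offence's contribution visible, and the number only orders individuals for an officer to review; it does not trigger any action by itself.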

This is what gives officers clarity within minutes, clarity they previously struggled to get even after hours of paperwork and phone calls. But one design rule was kept sacred: human judgement would never be replaced. “The model never auto triggers any action. Officers review the risk, cross check it with local intelligence, and then decide on watchlist inclusion, targeted beat patrolling, counselling, or preventive actions.”

In other words, AI informs; humans decide.

THE GROUND REALITY: PATROLS, PATTERNS AND PREVENTION

The visible impact of TRINETRA is felt most strongly on the ground.

Beat patrolling has become sharper. Areas hosting high-risk repeat offenders get focused attention. Patrol timings now sync with the district’s crime clock: late-night theft bursts, assault-prone hours and weekend spikes. Investigators, too, find themselves a step ahead. When a new offence is reported, TRINETRA instantly highlights matching MOs or behavioural patterns, speeding up work that once took hours.
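The MO-matching step is described only at a high level. As a hypothetical illustration, suppose each case's MO is recorded as a set of tags (entry method, time of day, target). A new case could then be compared with past cases using Jaccard similarity, a standard set-overlap measure swapped in here for whatever TRINETRA actually uses. The tag names, the 0.5 threshold and the function names are assumptions.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Share of MO tags two cases have in common, from 0.0 to 1.0."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def matching_mos(
    new_mo: set[str],
    past_cases: dict[str, set[str]],
    threshold: float = 0.5,  # hypothetical cut-off
) -> list[tuple[str, float]]:
    """Return (case_id, similarity) for past cases at or above threshold, best first."""
    scored = [(case_id, jaccard(new_mo, mo)) for case_id, mo in past_cases.items()]
    matches = [(cid, s) for cid, s in scored if s >= threshold]
    return sorted(matches, key=lambda m: m[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical tags for three past cases.
    past = {
        "C1": {"night", "window_entry", "two_wheeler"},
        "C2": {"night", "window_entry", "jewellery"},
        "C3": {"day", "chain_snatching"},
    }
    print(matching_mos({"night", "window_entry", "two_wheeler"}, past))
    # [('C1', 1.0), ('C2', 0.5)]
```

The output is a ranked shortlist for an investigator to check, not a conclusion about who committed the new offence.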

Perhaps the most transformative shift is in prevention. Officers can now see, weeks in advance, which individuals may be slipping back into reoffending patterns. “This allows for early counselling, check-ins, targeted monitoring and even environmental interventions: fixing a broken streetlight or increasing visibility in a vulnerable corner.”

The project is still in its pilot phase, but early indicators are promising: a perceptible dip in reoffending, quicker investigative leads and significantly more efficient deployment of manpower.

THE GROUND REALITY: MORE THAN DATA, IT’S HUMAN POLICING

Despite its technological sophistication, Akola’s model is deeply rooted in traditional policing discipline and human interaction.

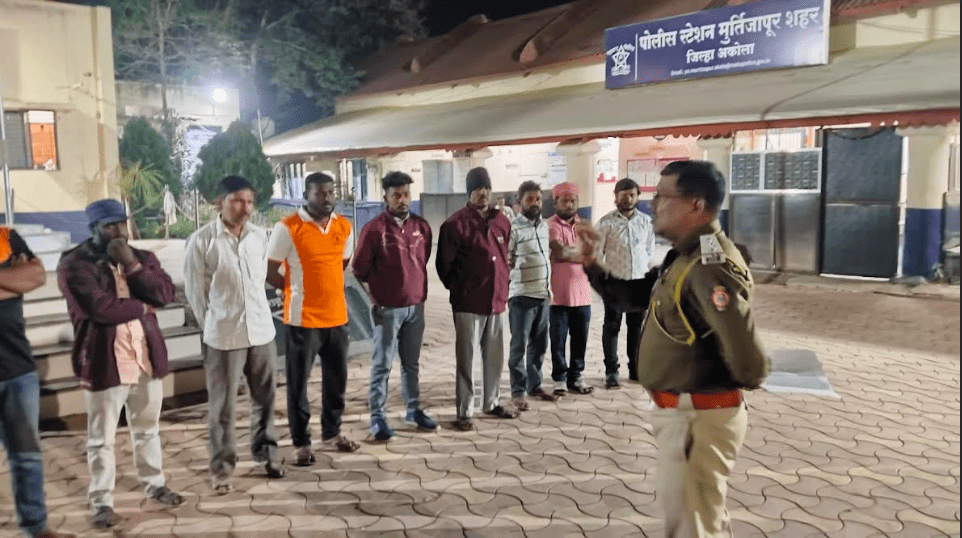

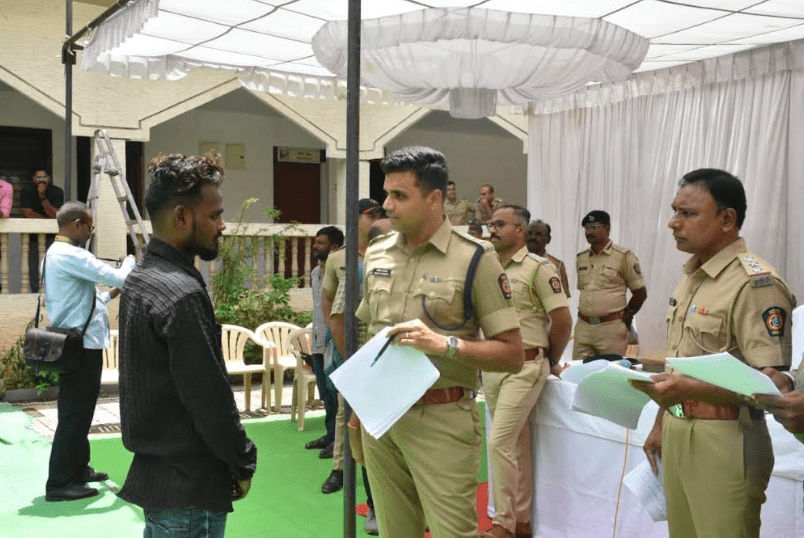

“We don’t just focus on data sets but remain active on the ground. Every Wednesday we take Wednesday parade. We also call the offenders to the police station. We monitor their source of income, their behavioral patterns towards crime. This goes on for 8–10 hours.”

These sessions are exhaustive. Officers meet repeat offenders face-to-face to understand their stressors, financial conditions and triggers. The police also work with NGOs for counselling. “Anybody in need of a job is helped, for example with housekeeping jobs.”

Chandak calls it empathetic policing: a parallel track where enforcement merges with rehabilitation. “We are integrating them into society and helping them change and be responsible citizens.”

The data pool is vast: Akola has more than 2,000 habitual criminals, meticulously categorized into body crimes, property crimes and special law offences. The trends are revealing. One of the most intriguing insights is that offenders aged 30 and above show reduced frequency and severity of crime, a finding now used to refine interventions.

WHAT SETS TRINETRA APART IS ITS REFUSAL TO COMPROMISE ON ETHICS

SP Chandak explains its foundational rule: “no caste, religion, community or socio-economic markers” are used. The model is trained to predict behaviour, not identity. Any proxy variable with the potential to encode bias is deliberately excluded.

Every prediction leaves an audit trail. Fairness checks run constantly. And the technology remains intentionally simple: “If we cannot explain a prediction, we do not use it.”

THE ROAD AHEAD: AN EMERGING POLICING MINDSET

For SP Archit Chandak and his team, the goal is not just better technology; it is better policing. They want a system that anticipates rather than reacts, one that empowers officers rather than replaces them, and one that may someday help shape statewide or even national standards for ethical AI in law enforcement. In Akola, that future is no longer theoretical. It is already taking shape, one data point, one pattern and one informed decision at a time.