As artificial intelligence rapidly reshapes the way societies function, policing stands at a crucial crossroads—where technology can enhance efficiency, foresight, and public safety but also raise serious questions about ethics, bias, and civil liberties. From predicting crime patterns to countering deepfakes, phishing scams, and digitally driven misinformation, law enforcement agencies are being pushed to adapt at an unprecedented pace.

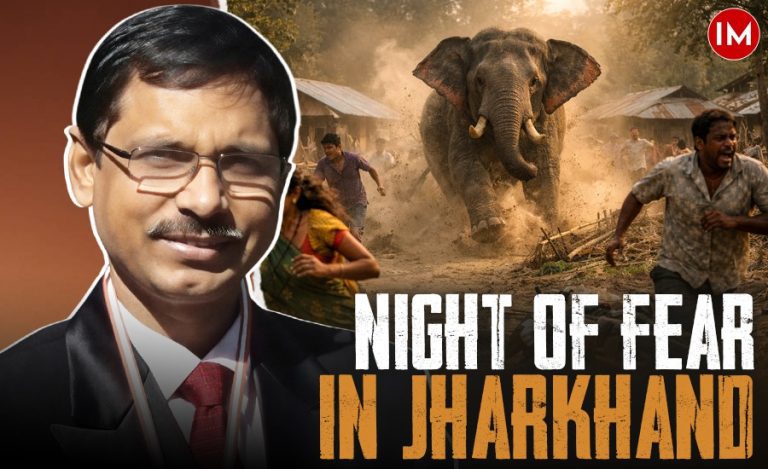

To explore how AI can be responsibly integrated into policing without undermining accountability or public trust, Indian Masterminds spoke with Sai Krishna P Thota, a 2014-batch IPS officer of the Madhya Pradesh cadre, currently serving as the Superintendent of Police, Narmadapuram. Drawing from his operational experience and a clear-eyed understanding of emerging technologies, he explains how AI can assist—not replace—human judgement in building a smarter, faster, and more transparent policing system.

Q1. How can AI-driven pattern analysis and predictive models help police identify crime hotspots, anticipate threats, and respond faster—without compromising civil liberties?

AI can help policing in three broad ways: planning, prediction, and optimisation.

First is planning. Police already possess vast amounts of data: crime records on CCTNS (Crime and Criminal Tracking Network and Systems), emergency response numbers like 112, disaster management inputs, transport and RTO databases, telecom data, banking information, and jail records. The challenge is not a lack of data but analysing it meaningfully. AI allows us to study this data holistically to identify crime hotspots, understand the nature of crimes, and deploy resources more efficiently. What earlier required manual analysis using spreadsheets can now be done instantly through heat maps and pattern recognition.

Second is prediction. Policing has traditionally relied on experience, such as knowing that certain crimes increase during winter nights in North India. AI strengthens this intuition by learning from historical data. It can predict possible crime trends, risks arising from the release of certain offenders, or potential violence around sensitive dates and events. This helps police prioritise threats proactively.

Third is optimisation. Police function with limited manpower, vehicles, arms, and investigation tools. AI can help optimise route planning, resource deployment, and inter-police-station coordination to ensure maximum efficiency with minimum resources.
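To make the hotspot idea under planning concrete, here is a minimal sketch of the simplest form of heat-map analysis: counting incidents per grid cell. It is an illustration only, not the method any police system is confirmed to use. The function name `hotspot_cells`, the 0.01-degree cell size, and the sample coordinates are all assumptions invented for this example.

```python
import math
from collections import Counter


def hotspot_cells(incidents, cell_deg=0.01, top_n=3):
    """Bin (lat, lon) points into square grid cells and return the busiest cells.

    `incidents` is an iterable of (latitude, longitude) pairs in degrees.
    `cell_deg` is the cell width in degrees (a hypothetical default).
    Returns a list of ((lat_index, lon_index), count), busiest first.
    """
    counts = Counter()
    for lat, lon in incidents:
        # Flooring the scaled coordinate gives an integer cell index.
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts.most_common(top_n)


# Made-up sample points: three fall in one cell, one falls elsewhere.
sample = [
    (22.755, 77.725),
    (22.756, 77.726),
    (22.757, 77.724),
    (22.801, 77.705),
]
busiest = hotspot_cells(sample)
```

A real deployment would need far more than counts (time of day, crime type, reporting bias), and the output would still need review by an officer before it informs any deployment decision.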

At the same time, concerns about civil liberties and bias are valid. AI systems learn from data, and data may contain historical biases. Therefore, AI should never be treated as a final decision-maker. There must always be a human in the loop. Officers must understand AI outputs, question them, and correct biases where necessary. AI should assist decision-making—not replace it.

Q2. With deepfakes and AI-generated misinformation becoming common, what strategies can police adopt to counter these digital threats?

The first step is identification. While deepfakes are becoming more realistic, AI itself is a powerful tool to detect them. There are already tools that can analyse videos or images at a pixel level and identify whether they are AI-generated.

The second step is verification. Once content is flagged as fake, police must quickly establish the truth using available data and intelligence resources.

Third is rapid dissemination of truth. Once verified, correct information must reach the public quickly to neutralise misinformation.

Fourth is legal action. Those responsible must be identified and penalised to deter repetition.

Finally, police personnel must be trained continuously so they can identify, verify, and respond to such threats faster and more effectively.

Q3. Generative AI tools are now being misused for phishing, scams, and even malicious coding. How prepared are law enforcement agencies to deal with this challenge?

This is a serious concern. Generative AI can create realistic voice clips, emails, and messages in multiple languages, making scams highly convincing.

At present, law enforcement is partially prepared. Agencies like the Indian Cyber Crime Coordination Centre (I4C) are doing commendable work. States are also collaborating with ethical hackers and private consultants under public–private partnership models.

Tools like WormGPT are particularly dangerous because they lack ethical guardrails. Unlike responsible AI models, such tools can generate malicious code or fraudulent content without restrictions.

Our response strategy remains the same: early identification, fast verification, swift action, and legal reinforcement. However, we still need stronger institutional readiness, better detection mechanisms, and updated legal provisions to match the speed and sophistication of such threats.

Q4. As AI becomes more integrated into investigations, what safeguards are needed to ensure transparency and accountability?

First, we must accept that AI integration is inevitable. The sooner we adapt responsibly, the better.

The core principle is that AI must never be the final authority. The investigating officer remains fully accountable. AI is only a tool.

Second, all AI-generated outputs must be clearly logged and labelled so it is known whether an output was human-generated or AI-assisted.

Third, autonomous AI systems—where used—must have overridable actions and complete audit trails.

Fourth, data ownership and security are critical. AI models are often owned by large corporations, and there is a risk that sensitive police data could leak into external training systems. Strict compartmentalisation and data protection frameworks are essential.

Finally, there must always be a clearly identifiable human authority legally accountable for AI-assisted decisions.
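The logging, override, and accountability safeguards described above could be sketched roughly as follows. This is an illustrative assumption, not an existing police system: the class names `AuditEntry` and `AuditTrail`, the field names, and the two source labels are hypothetical. Each entry is labelled as human-generated or AI-assisted, names an accountable officer, and can be overridden only by appending a new human entry, so the original record is never altered.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional, Tuple

SOURCES = ("human", "ai_assisted")  # hypothetical labels


@dataclass(frozen=True)
class AuditEntry:
    case_id: str
    output: str
    source: str            # "human" or "ai_assisted"
    officer_id: str        # the accountable human for this entry
    timestamp: str         # UTC, ISO 8601
    overrides: Optional[int] = None  # index of the entry this one overrides


class AuditTrail:
    """Append-only log: entries are added, never edited or removed."""

    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def _check(self, entry_id: int) -> None:
        if not 0 <= entry_id < len(self._entries):
            raise IndexError("no such audit entry")

    def record(self, case_id: str, output: str, source: str,
               officer_id: str, overrides: Optional[int] = None) -> int:
        if source not in SOURCES:
            raise ValueError(f"source must be one of {SOURCES}")
        if overrides is not None:
            self._check(overrides)
        stamp = datetime.now(timezone.utc).isoformat()
        self._entries.append(
            AuditEntry(case_id, output, source, officer_id, stamp, overrides)
        )
        return len(self._entries) - 1

    def override(self, entry_id: int, officer_id: str, reason: str) -> int:
        """Record a human override of an earlier entry, with the reason."""
        self._check(entry_id)
        original = self._entries[entry_id]
        return self.record(original.case_id, reason, "human",
                           officer_id, overrides=entry_id)

    def is_overridden(self, entry_id: int) -> bool:
        return any(e.overrides == entry_id for e in self._entries)

    def entries(self) -> Tuple[AuditEntry, ...]:
        return tuple(self._entries)
```

For example, an AI-assisted suggestion recorded with `record(...)` can later be countermanded with `override(...)`, and both entries remain visible in `entries()` for review.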

Q5. How will AI impact India’s internal security architecture?

AI will have a profound impact. It can help predict unrest, identify early signs of radicalisation, assist in deradicalisation efforts, decode terror-financing patterns, detect drone misuse, and counter deepfake-driven misinformation.

However, this also means stronger ethical and legal frameworks are required. Individuals should not be allowed to evade responsibility by blaming AI for criminal acts. Accountability must always rest with humans.

Q6. Will AI improve police response time and operational efficiency?

Absolutely. A significant portion of police work involves drafting, documentation, and planning. Large Language Models can greatly reduce this burden.

During investigations, AI can help officers by suggesting overlooked aspects based on crime scene inputs. It can support planning, prediction, and resource optimisation.

AI will not replace policing skills, but it will significantly enhance efficiency and effectiveness.

Q7. Is there any important aspect of AI in policing that is often overlooked?

Yes—the judicial interface.

Digital evidence, AI-generated analysis, and cyber records must be presented in courts in a way that is both legally admissible and easily understood. Just as pen drives and digital documents required procedural adaptation, AI-based evidence demands a rethinking of how investigations are presented and appreciated by the judiciary.

This is a critical area that requires serious institutional focus and long-term planning.